tl;dr

MSBuild’s OutDir parameter must be of the form:

/p:OutDir=C:\folder\with\no\spaces\must\end\with\trailing\slash\

…or of the form:

/p:OutDir="C:\folder w spaces\must end w 2 trailing slashes\PS\this makes no sense\\"

I have written a self-contained PowerShell function to handle OutDir’s mini-language that exists because…I don’t know why, because they hate us? Anyway, the script is all the way at the bottom. PS “backwards compatibility” is code for “we hate you,” in case you get “backwards compatibility” as the reason OutDir’s syntax is so hostile on your Connect issue you filed so diligently. That’s also a trick, because you’re not supposed to file Connect issues.

I hate you, OutDir parameter

Okay, so the post title is unhelpful. Deal with it. I’m in pain, and a suffering man should be afforded some liberties. I’m like Doc Holliday: minus tuberculosis, plus build script duties. Or the whooping cough. I didn’t pay much attention during Tombstone, but he did cough a lot. Could be parasites.

Build script duties are some of the worst, alongside SSRS reporting duties, SharePoint integration duties, auditor-friendly deployment documentation duties, or any combination of those three. I don’t know what IT auditors do for fun—I simply can’t imagine. I don’t know if they can either. Think about it.

…back to build scripts. A bad build script will kill your chances of getting any kind of an automated deployment working, and if you can’t do builds or deployments well, you end up editing your production web.config in production and writing Stored Procedures because deploying code is just so painful. And then no one wants to deploy because it takes about three weeks and seventeen tries before you get it right, and no one’s writing any sort of automated tests around your stored procedures (except that one guy who’s waaay too excited about T-SQL, but he writes try/catch blocks in T-SQL and is pushing for Service Broker, so…can’t trust him), and this has all kinds of implications, and then all of a sudden exclusive checkouts sound like a good idea, and you wake up one morning and you’re doing Access development. Again(!!!). Except less productive. And your customers don’t trust you, and then one day you’re just fired outright, and the next day you’re on the street, and then finally, out of options, you reach the lowest low: you develop and release an app on the iTunes app store. Lowest of the low. Can’t possibly get worse, unless you’re forced to write code in Ruby, which requires you join the Communist Party, as is clearly written in the AGPL (yes, this is why Microsoft wrote their own GPL: they’re fighting both terror and communism, and socialism, one license agreement at a time). This is why you read the EULA. Communism is why.

Anyway, MSBuild’s OutDir parameter isn’t making my build script duties any easier.

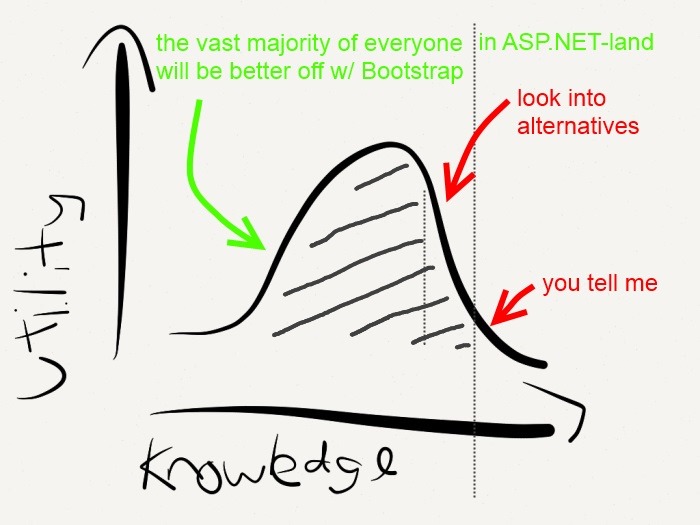

Regarding OutputPath

I tried researching OutputPath, but it looks like a different metaphorical universalist path up the same mountain named “appending 1 or more slashes to the end of everything for no reason”, so I gave up. When it comes to doing in-depth research on any framework, including, and today featuring, MSBuild and its wonderfulness, you either find out that a) you were woefully ignorant all along and just needed that one tidbit of knowledge, with which you can SUCCEED, or b) you were unfortunately justified in distrusting your framework because your framework has FAILED you. After a few extremely painful episodes, I started giving up early and looking for a workaround, which, it turns out, is what most people do anyway.

OutputPath smells like it has the same problems that OutDir has, so I just gave up on it and went with the workaround (below). I could be wrong about OutputPath. Blame SharePoint for my wariness.

But I’m not only here to complain

I’m here to complain, don’t get me wrong. Like a wounded Rambo provided with only fire, kerosene and his trusty serrated knife, I’m writing this post as a kind of Rambo shout before I pass out from the pain after cauterizing my wound the Rambo way. Life sucks*.

*not actually true

But I’m also here to let you know, hey, if you’re in the Cambodian jungle* with a bullet wound and you’ve got to do something, here’s what you do. Maybe you won’t bleed all over the flora and fauna** with your bullet wound in the Cambodian jungle as long as I did, maybe this post will help you along in your journey…whatever that journey is. It’s a journey of some kind. Let’s not stretch the metaphor too far. Wait, aren’t we talking about build scripts?

*I am not going to do any research, do not question or fact-check my Rambo knowledge. Just assume I got it right.

**it seemed like the right thing to say at the time

Why: A brief explanation why OutDir exists

Now, onto something resembling a technical blog post.

OutDir exists so that, when compiling a Project (e.g. “msbuild MyProject.csproj”) or Solution (e.g. “msbuild MyManyProjects.sln”), you can tell MSBuild where to put all the files. Or if you like fancy words, “compilation artifacts for your ALM as part of your SDLC”. You’re welcome. I’m SDLC certified 7-9 years experience, ALM 8.5 years, MS Word 13 years. Hire me, I’ve got an edge on the other candidate by 2.5 years SDLC and a whopping 9 years MS Word. Numbers can’t lie! Plus I’ve got 5 years OOP, 3 years OOA, 4.5 years OOD. You can’t argue with numbers.

Where were we? Ah, putting compilation artifacts in folders. Without OutDir, you don’t have that control.

Let’s take the simple example. “msbuild MyProject.csproj” will put MyProject.dll in the bin\Debug subfolder, just like compiling from Visual Studio. If you set the configuration to Release, à la “msbuild MyProject.csproj /p:Configuration=Release”, everything will be dumped into bin\Release. If you have no idea what’s going on and you make a third build configuration, e.g. “msbuild MyProject.csproj /p:Configuration=Towelie”, the files will be dumped in bin\Towelie.

You get the idea. By default, files go in bin\$Configuration, whatever $Configuration happens to be at the time.

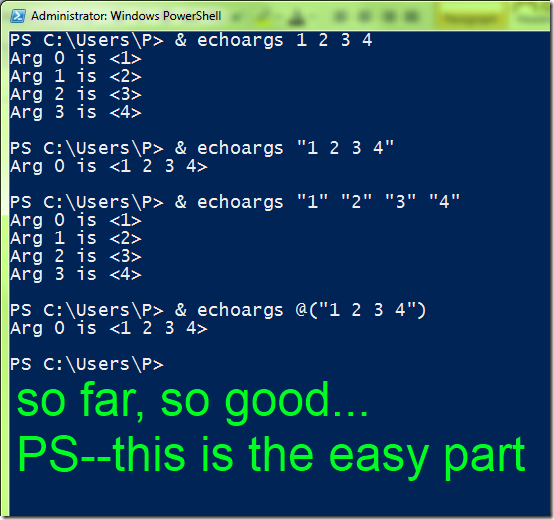

So here comes OutDir to shake things up. Let’s try a simple example:

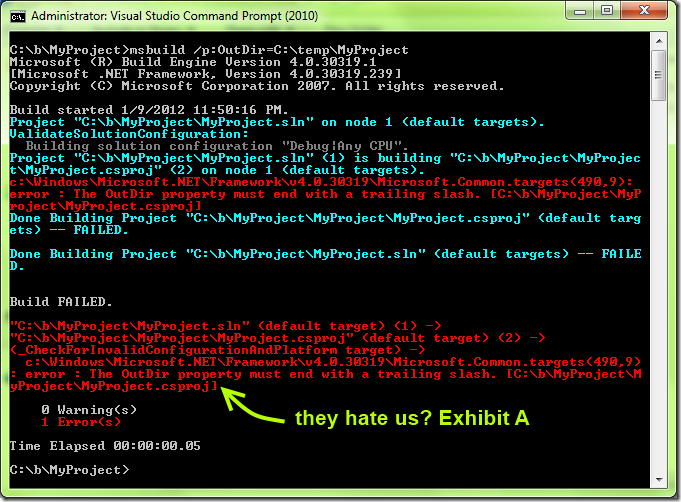

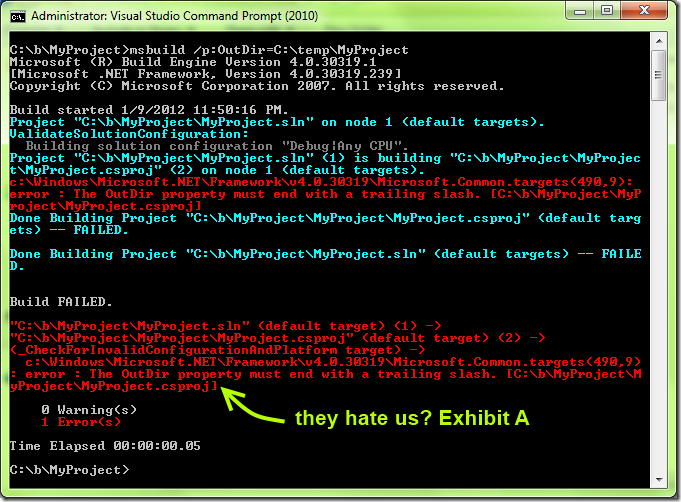

“msbuild MyProject.csproj /p:OutDir=C:\temp\MyProject”

Haha! Tricked you! This simple example doesn’t work! You forgot the trailing slash!*

*serious aside: would it have taken more effort to write and localize an error message in seven hundred languages including Bushman from Gods Must Be Crazy 2, or just accept the path without a trailing slash and fix it for us? I can’t imagine it would be harder to just scrub the input. I’m serious. I’m Batman voice serious. Seriously.

Okay, let’s try this again, but after paying the syntax tax:

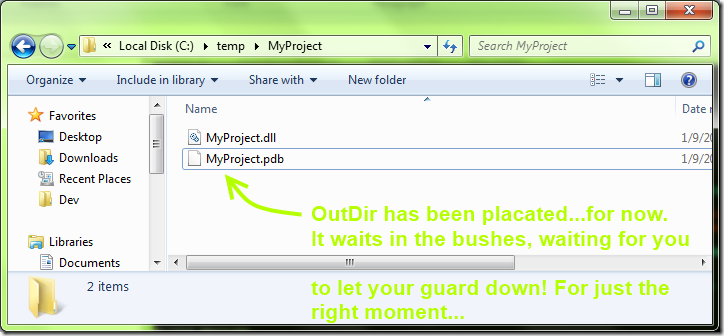

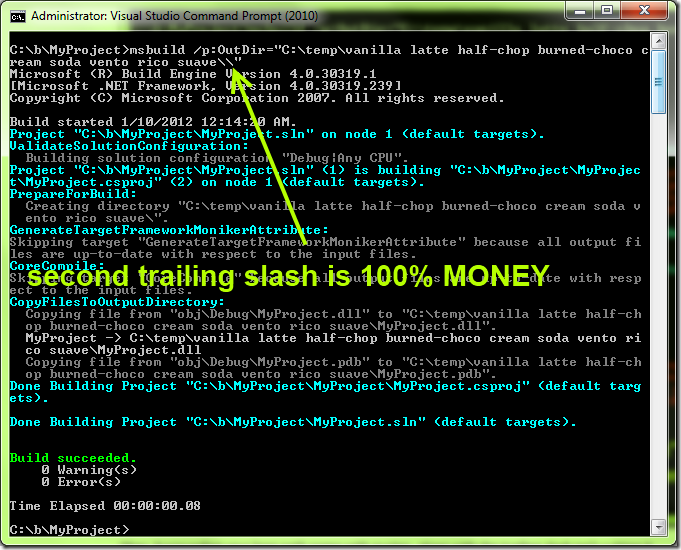

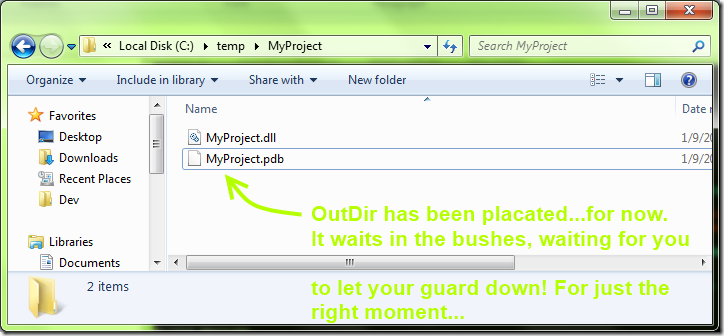

“msbuild MyProject.csproj /p:OutDir=C:\temp\MyProject\”

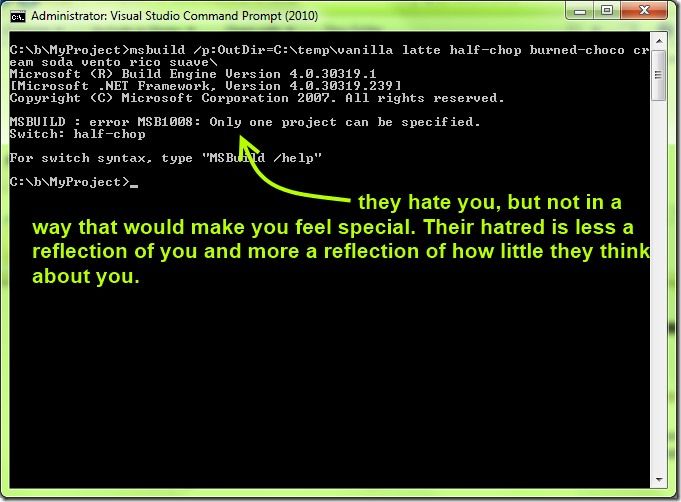

You get exactly one guess what happens. Okay, who cares, I’ll just show you.

So you get the idea.

A second example, this time illustrating the use of path names with spaces

Okay, first off, MSBuild’s OutDir parameter is only one of the many, many reasons that I dislike spaces in filenames, path names, even passwords. I mean passphrases. Of course I mean passphrases. Passwords are crackable. Passphrases are the way to go.

Don’t even get me started about Uñicode support.

Second, let me point out that I can work perfectly fine without setting OutDir. I know where my files go, and I know how to reliably copy files from bin\debug folders directly into production as part of my nightly build process (PS for the humorless, don’t try that). But, I need OutDir, because TFS’s default build definition uses OutDir whether you like it or not. And, in the course of setting up a working TFS 2010 build, at the time I needed to a) understand, and b) simulate TFS’s compilation process.

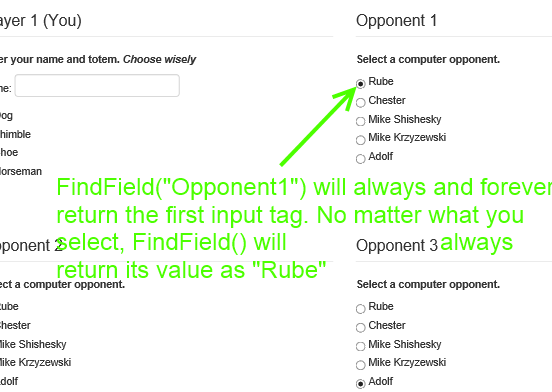

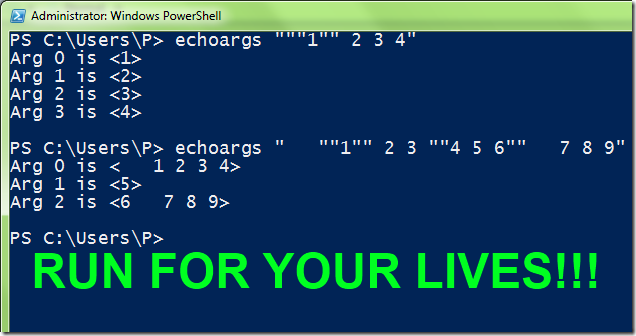

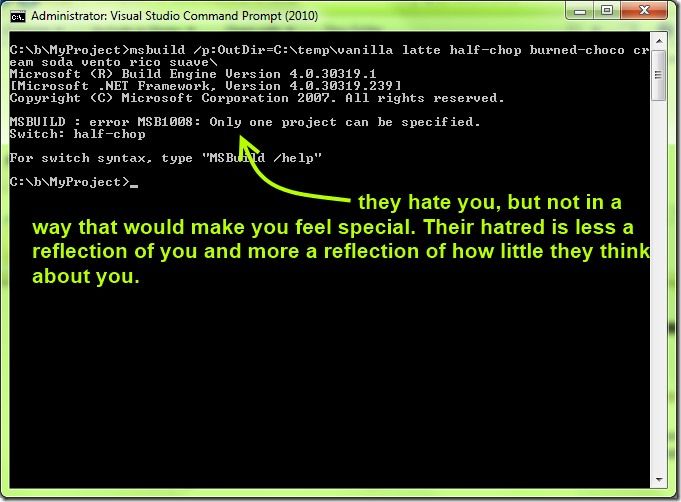

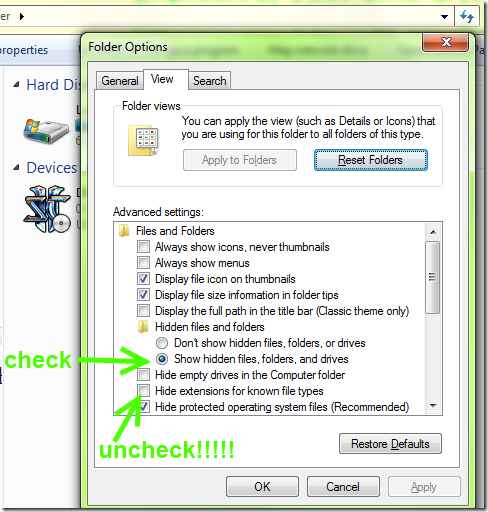

Anyway, some of our TFS build names have spaces in them, which means that some of the folder names have spaces in them, which means that my script that calls OutDir needs to handle folder names with spaces in them. Let’s try the vanilla latte half-chop burned-choco cream soda vento rico suave way of calling OutDir and see what happens:

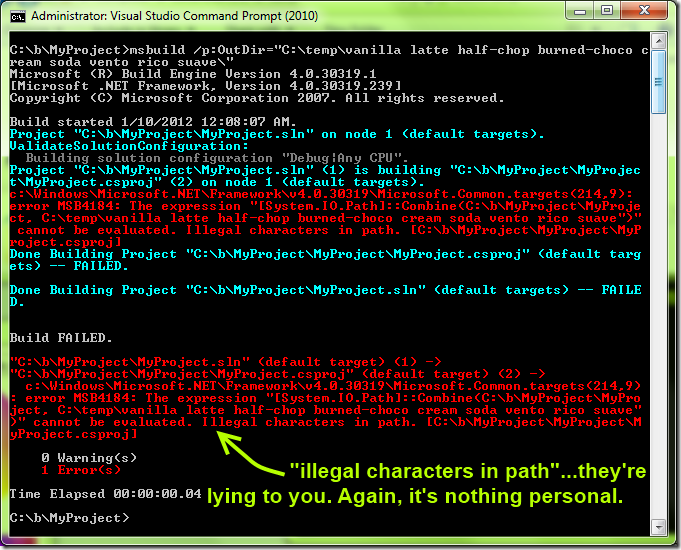

Okay, we cheated somewhat, because we didn’t even bother to surround our long path name with quotes. Rookie! Let’s try again:

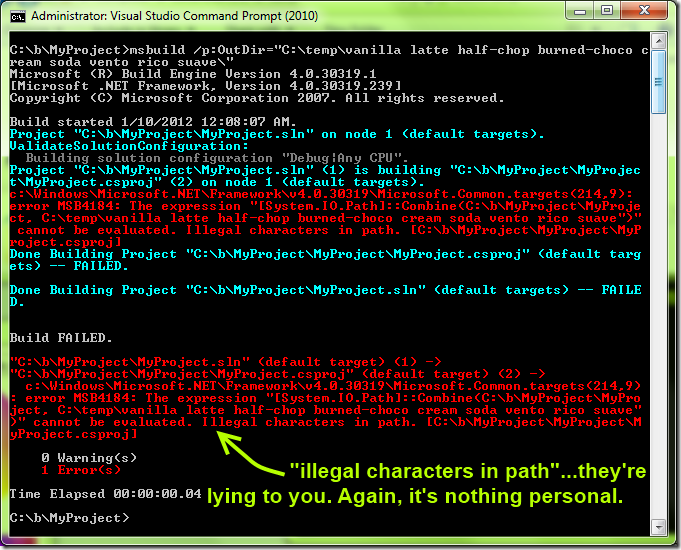

Okay. Surrounding your long path name with quotes, along with the trailing slash, isn’t cutting it.

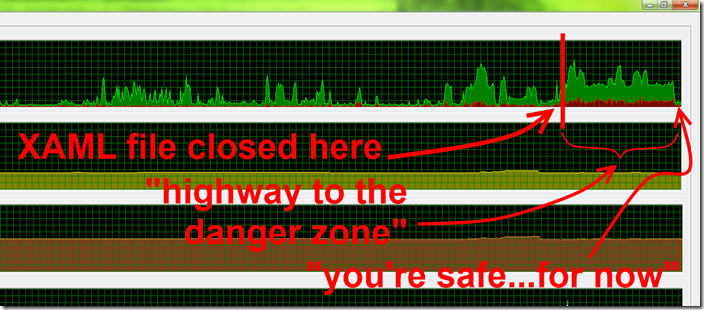

This “Illegal characters in path.” error message is where I’ve lost probably…let’s not estimate, my professionalism will be called into question. Anyway, let’s just say “a lot of time” was lost on this problem.

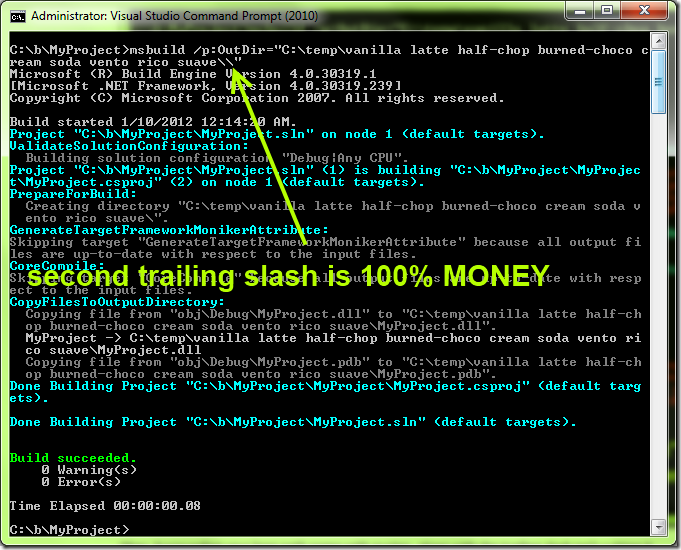

So here’s the solution: wrap the path in quotes and end it with two trailing slashes.

I don’t know why, and at this point, I’ve lost the fighting spirit. It’s setting an output folder in MSBuild after all, I’m not exactly writing a new OS scheduler, though I have a vague idea that OS scheduling is not like Outlook scheduling, and my resume says I have 3.5 years of OS Scheduler experience, so I can speak to it.

Someone in the comments of this blog post suggested the double trailing slash solution, and what do you know, it worked, and here I am much later writing a blog post that is way too long to justify this much effort.
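For what it’s worth, here is a plausible explanation, and let me be loud about the fact that it is an assumption: I have not read MSBuild’s source. Under the usual Windows convention for parsing command lines (the one documented for the Microsoft C runtime), a backslash directly before a double quote escapes the quote. So a quoted path ending in a single trailing slash swallows its own closing quote as a literal character (hello, “Illegal characters in path.”), while two trailing slashes collapse into one and the quote closes the string as intended. The sketch below is a simplified model of that convention. Split-WindowsCommandLine is a made-up name for illustration, and the paths are hypothetical.

```powershell
# Simplified model of the common Windows backslash/quote convention.
# ASSUMPTION: this only illustrates the rule; it is not MSBuild's real parser,
# and Split-WindowsCommandLine is a hypothetical helper.
function Split-WindowsCommandLine([string]$commandLine) {
    $arguments = @()
    $current = ''
    $inQuotes = $false
    $hasToken = $false
    $i = 0
    while ($i -lt $commandLine.Length) {
        $c = $commandLine.Substring($i, 1)
        if ($c -eq '\') {
            # Measure the run of consecutive backslashes.
            $run = 0
            while ($i -lt $commandLine.Length -and $commandLine.Substring($i, 1) -eq '\') {
                $run++
                $i++
            }
            $beforeQuote = ($i -lt $commandLine.Length) -and ($commandLine.Substring($i, 1) -eq '"')
            if ($beforeQuote) {
                # Each pair of backslashes before a quote collapses to one backslash.
                $current += '\' * [int](($run - ($run % 2)) / 2)
                if ($run % 2 -eq 1) {
                    $current += '"'             # odd run: the quote is a literal character
                } else {
                    $inQuotes = -not $inQuotes  # even run: the quote is a delimiter
                }
                $i++
            } else {
                $current += '\' * $run          # no quote follows: backslashes are literal
            }
            $hasToken = $true
        } elseif ($c -eq '"') {
            $inQuotes = -not $inQuotes
            $hasToken = $true
            $i++
        } elseif ($c -eq ' ' -and -not $inQuotes) {
            # An unquoted space ends the current argument.
            if ($hasToken) { $arguments += $current }
            $current = ''
            $hasToken = $false
            $i++
        } else {
            $current += $c
            $hasToken = $true
            $i++
        }
    }
    if ($hasToken) { $arguments += $current }
    return ,$arguments
}

$bad  = Split-WindowsCommandLine 'msbuild MyProject.csproj /p:OutDir="C:\temp\My Project\"'
$good = Split-WindowsCommandLine 'msbuild MyProject.csproj /p:OutDir="C:\temp\My Project\\"'
$bad[-1]    # /p:OutDir=C:\temp\My Project"
$good[-1]   # /p:OutDir=C:\temp\My Project\
```

If that model is close to what MSBuild does, the single-slash version hands it a path ending in a stray quote character, which is not a legal path, and the double-slash version hands it the path you meant.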

Wrapping up what we’ve learned today, in bullet point form

- Doc Holliday has either TB or the whooping cough. Or parasites.

- They hate us:

- MSBuild’s OutDir parameter must be of the form:

/p:OutDir=C:\folder\with\no\spaces\must\end\with\trailing\slash\

- …or of the form:

/p:OutDir="C:\folder w spaces\must end w 2 trailing slashes\makes no sense\\"

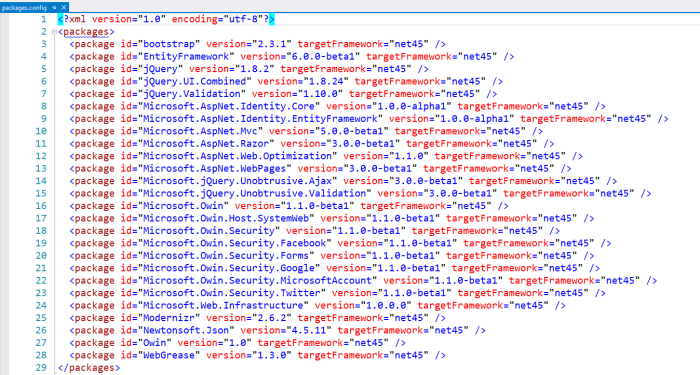

Wrapping up what we’ve learned today, in PowerShell function form

Enjoy. There’s almost nothing special about this. The value Compile-Project gives you is that it hides (or if we’re using the fancy words, encapsulates) the horrible rules OutDir imposes on us, freeing the caller to worry about, oh, I don’t know, writing an OS Scheduler.

Feel free to cut-and-paste. I’m not going to force you to join the Communist Party like the AGPL does.

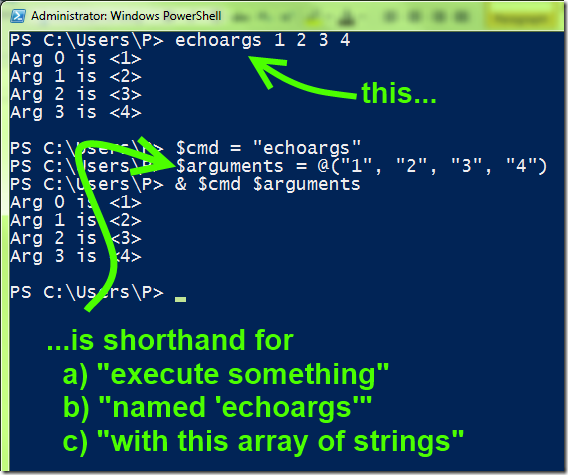

And do note the commented-out psake-friendly line. Psake’s Exec function exists to encapsulate the weirdness with executing DOS commands from PowerShell. I figure, if you’re calling MSBuild, chances are good you’re calling it from psake, but if not, here’s a script that will bubble up a reasonable error message to the user.

Psake or not, if you’re calling this PowerShell script from TeamCity, the error message will bubble up to the top. If you’re using TFS, follow these instructions to experience the joy that is visual programming (and yes, you’ll also get good error messages bubbled up to the top).

Also, this isn’t one of those bulletproof, general-purpose functions, what with proper types and default values for each argument, logging via write-verbose, a -whatif switch, documentation, and whatever else I’m ignorant of. Of. I don’t do that day-to-day for my PowerShell scripts. I just write what I need today, and maybe generalize what I have if I use the same function twice in a script. It’s not like sharing functions between PowerShell scripts is desirable. Like sharing needles. A discussion of the merits of needle sharing is a good way to wrap up a blog post. And on that note, here’s the script:

    $msbuildPath = 'C:\windows\Microsoft.NET\Framework\v4.0.30319\msbuild.exe'

    function Compile-Project($project, $targets, $configuration, $outdir) {
        if (-not ($outdir.EndsWith("\"))) {
            $outdir += '\' #MSBuild requires OutDir end with a trailing slash #awesome
        }
        if ($outdir.Contains(" ")) {
            $outdir="""$($outdir)\""" #read comment from Johannes Rudolph here: http://www.markhneedham.com/blog/2008/08/14/msbuild-use-outputpath-instead-of-outdir/
        }

        #if you're calling this from psake, save yourself the trouble and use their "exec" command.
        #psake:
        #exec { & $msbuildPath """$project"" /t:$($targets) /p:Configuration=$configuration /p:OutDir=$outdir" }

        #Vanilla PowerShell, non-psake: merge stderr into stdout and keep a copy for the error message
        & $msbuildPath """$project"" /t:$($targets) /p:Configuration=$configuration /p:OutDir=$outdir" 2>&1 | Tee-Object -Variable msbuildOutput
        if ($lastExitCode -ne 0) {
            write-error "Error while running MSBuild. Details:`n$($msbuildOutput | Out-String)"
            exit 1
        }
    }
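If you want to poke at the OutDir rules without actually running MSBuild, here is a minimal sketch that pulls just the string handling into its own function. Format-OutDirValue is a hypothetical name that is not part of the script above, and the paths are invented for illustration.

```powershell
# Hypothetical helper (not part of the original script): returns the text to
# place after "/p:OutDir=", applying the two rules from this post.
function Format-OutDirValue([string]$outdir) {
    if (-not $outdir.EndsWith('\')) {
        $outdir += '\'                   # rule 1: always end with a trailing slash
    }
    if ($outdir.Contains(' ')) {
        $outdir = '"' + $outdir + '\"'   # rule 2: quote it and double the trailing slash
    }
    return $outdir
}

Format-OutDirValue 'C:\temp\MyProject'     # C:\temp\MyProject\
Format-OutDirValue 'C:\temp\My Project'    # "C:\temp\My Project\\"
Format-OutDirValue 'C:\temp\My Project\'   # "C:\temp\My Project\\"
```

Keeping the path mangling separate from the MSBuild call means you can check the three cases above by eye, or in a test, before trusting it with a real build.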

Peter Seale's weblog
